Generative AI Governance


Generative AI is a transformative force, pushing the boundaries of innovation. However, with great power comes great responsibility. The ethical implications of Generative AI necessitate the establishment of robust governance frameworks to guide its development and deployment.

According to McKinsey, generative AI models can add trillions of dollars to the global economy, demonstrating mind-blowing successes in some areas while posing risks in others.

In the 2022 IAPP-EY Annual Privacy Governance Report, AI governance ranked ninth among strategic priorities for privacy functions. In 2023, it surged to second place. This rapid ascent is understandable, considering that 57% of privacy functions have taken on added responsibilities for governing AI.

Artificial Intelligence (AI) represents a new frontier in human development, rapidly shaping our world and influencing socioeconomic progress globally. The governance of AI emerges as a shared responsibility among nations, holding significant implications for the future of humanity.

What is Generative AI Governance?

Generative AI Governance refers to the principles, policies, and practices designed to ensure the responsible and ethical use of generative AI technologies. Understanding generative AI basics is crucial in grappling with the unique challenges posed by AI systems that can generate creative outputs autonomously. It involves defining standards, establishing guidelines, and implementing controls to steer the development and deployment of generative algorithms.

The dynamic field of artificial intelligence (AI) is evolving rapidly, permeating diverse sectors and industries. However, this widespread adoption has led to a fragmentation of terminology, leaving business, technology, and government professionals without a standardized lexicon and shared understanding of AI governance concepts.

Importance of Generative AI Governance

In any market, the significance of well-crafted rules is foundational to unlocking its potential. This holds especially true for generative AI, where the absence of clear regulatory conditions can lead to seismic risks.

Although Europe is set to be the first region to implement broad AI governance legislation in the form of the EU AI Act, the imperative for effective governance is not just a European concern but a global one.

Applications of Generative AI

Generative AI’s crowning feature, pre-trained language processors (such as ChatGPT), offers various industry applications. It revolutionizes how tasks are automated, human capabilities are augmented, and business and IT processes are autonomously executed.

A List of Generative AI Applications

1. Content Generation

Generative AI powers automated content creation, including articles, reports, and creative pieces.

2. Design and Creativity

Generative AI creates unique visuals, graphics, and artistic elements in design.

3. Language Translation

Generative models enhance language translation services, enabling more accurate and context-aware interpretations.

4. Code Generation and Programming

By automating code generation, generative AI helps developers accelerate software development processes.

5. Data Augmentation

Generative AI is utilized to augment datasets for machine learning models, enhancing the robustness and generalization of AI algorithms.

6. Virtual Assistants and Chatbots

Generative AI enables natural language interactions with users by powering virtual assistants and chatbots.

State of Generative AI Usage

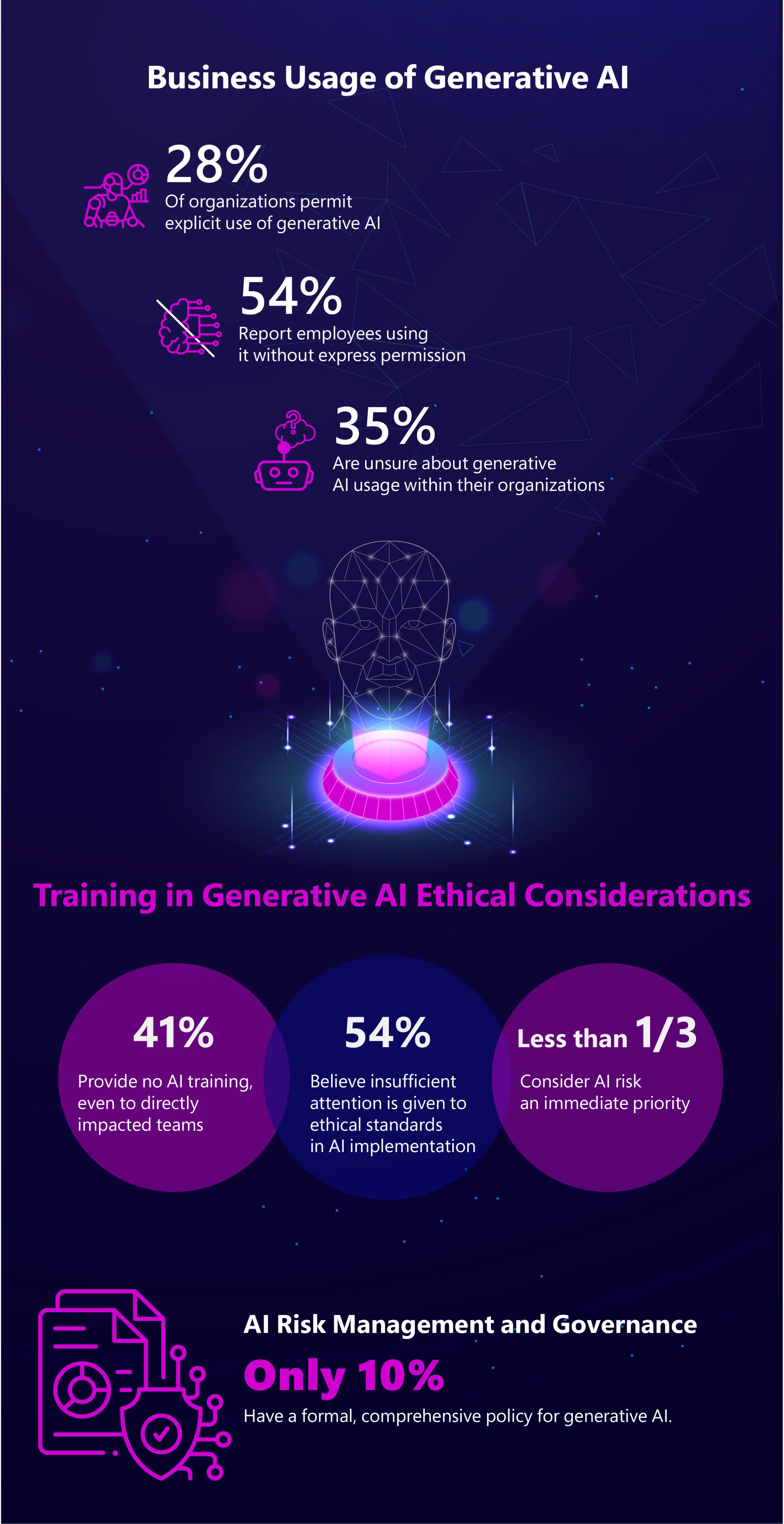

In a comprehensive survey conducted by ISACA, over 2,300 professionals specializing in audit, risk, security, data privacy, and IT governance shared their perspectives on the present landscape of generative AI.

Lessons Learned: Generative AI usage is increasing, but governance and oversight lag sorely behind.

Business Usage of Generative AI

28% of organizations expressly permit the use of generative AI.

54% report employees using it without express permission.

35% are unsure about generative AI usage within their organizations.

Training in Generative AI Ethical Considerations

41% provide no AI training, even to directly impacted teams.

54% believe insufficient attention is given to ethical standards in AI implementation.

Less than one-third consider AI risk an immediate priority.

AI Risk Management and Governance

Only 10% have a formal, comprehensive policy for generative AI.

Source: ISACA 2023 Survey. Learn more at isaca.org/resources/artificial-intelligence.

Statistics Highlighting Generative AI Applications

A recent Gartner survey projected that by 2025, approximately 80% of customer service and support organizations will integrate generative AI technology in some capacity to enhance agent productivity and customer experience.

According to Gartner, generative AI will predominantly be leveraged for content creation, AI-supported chatbots, and the automation of human tasks. Its most profound impact is expected to be on customer experience, as revealed by the survey above, where 38% of leaders identified improving customer experience and retention as the primary purpose of deploying applications based on large language models.

Ethics in Generative AI

The exponential growth of generative AI brings unprecedented possibilities, accompanied by ethical challenges that demand careful consideration. As this transformative technology permeates various aspects of our lives, it introduces dilemmas related to data governance, intellectual property, bias mitigation, and the responsible use of AI-generated content.

The landscape around the ethics of generative AI governance is complex, requiring organizations to strike a delicate balance between harnessing innovation and upholding ethical principles.

Ethical Challenges in Generative AI Governance

Generative AI, often called the “wild west” of technological frontiers, presents a rush of opportunities accompanied by ethical intricacies.

Despite the broad acknowledgment of these ethical risks, translating ethical principles into operational practices remains challenging.

Privacy and Data Integrity

Using generative AI, particularly in training foundation models, raises concerns about data privacy. The sheer volume of data required for training, often sourced from diverse and extensive datasets, may clash with principles of individual consent mandated by regulations like the General Data Protection Regulation (GDPR). Balancing the need for extensive data with the rights of individuals poses a significant ethical dilemma.

Bias and Fairness

Generative AI systems, driven by foundation models, can inherit biases present in the training data. Whether these biases relate to gender, race, or other characteristics, the challenge lies in mitigating them to ensure fair and equitable outcomes. Regulators will likely demand transparency and accountability in addressing biases, requiring organizations to implement measures that minimize their impact on AI-generated content.

Copyright Usage

Using copyrighted content in training generative AI models introduces ethical considerations. Striking a balance between the need for training data and respecting copyright regulations becomes crucial. Clarity on using copyright-protected material and adherence to legal frameworks are essential aspects of ethical generative AI governance.

Other Challenges and Risks in Generative AI

Risk 1: Intellectual Property and Data Leakage

Enterprises venturing into generative AI confront a critical hurdle involving the potential leakage of intellectual property (IP) and data. The accessibility of web- or app-based AI tools may inadvertently lead to shadow IT, risking the processing of sensitive data outside secure channels.

To counter this, it is imperative to restrict access to IP. Measures like utilizing VPNs for secure data transmission and implementing tools like Digital Rights Management (DRM) can help mitigate these risks. OpenAI also allows users to opt out of data sharing with ChatGPT, which strengthens confidentiality and data governance when using generative AI.
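One way to picture restricting what reaches a web- or app-based AI tool is a screening step that runs before a prompt leaves the organization. The sketch below is only an illustration under stated assumptions: the function `screen_prompt`, the pattern names, and the regular expressions are all hypothetical and are not taken from any specific product. A real deployment would rely on the organization's own data-classification rules.

```python
from __future__ import annotations

import re

# Hypothetical patterns for illustration only; real rules would come from
# the organization's data-classification policy.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "api_key": re.compile(r"\b(?:sk|key)-[A-Za-z0-9]{16,}\b"),
    "confidential_marker": re.compile(
        r"\b(?:confidential|internal only)\b", re.IGNORECASE
    ),
}


def screen_prompt(prompt: str) -> tuple[str, list[str]]:
    """Redact sensitive matches and report which pattern names fired."""
    findings: list[str] = []
    redacted = prompt
    for name, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(redacted):
            findings.append(name)
            redacted = pattern.sub(f"[REDACTED:{name}]", redacted)
    return redacted, findings


if __name__ == "__main__":
    text = "Contact jane.doe@example.com about the CONFIDENTIAL roadmap"
    safe_text, hits = screen_prompt(text)
    print(safe_text)
    print(hits)
```

A non-empty findings list could be logged for the governance team or used to block the request entirely; which of those is appropriate is a policy decision rather than a technical one.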

Risk 2: Vulnerability to Security Breaches

Generative AI’s reliance on large datasets exposes it to significant privacy and security risks. Recent incidents, such as the breach involving OpenAI’s ChatGPT, underscore vulnerabilities in data governance for generative AI. Privacy breaches and potential misuse by malicious actors for creating deepfakes or spreading misinformation highlight the need for robust cybersecurity infrastructure in AI models.

Strengthening data protection measures is critical to safeguard against potential cyberattacks.

Risk 3: Unintentional Use of Copyrighted Data

Enterprises utilizing generative AI face the risk of unintentionally using copyrighted data, leading to legal complications.

Mitigating this risk involves prioritizing first-party data, ensuring proper attribution and compensation for third-party data, and implementing efficient data management protocols within the enterprise.
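As a rough sketch of what such a data management protocol might look like in practice, the example below tags each training record with its provenance and sets aside third-party records that lack a license or attribution. The `TrainingRecord` fields, the `"first_party"` and `"third_party"` labels, and the acceptance rule are assumptions made for illustration; they do not represent legal advice or any particular tool.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class TrainingRecord:
    text: str
    source: str                        # "first_party" or "third_party" (hypothetical labels)
    license: Optional[str] = None      # e.g. "CC-BY-4.0"; None means unknown
    attribution: Optional[str] = None  # who to credit for third-party data


def is_usable(record: TrainingRecord) -> bool:
    """First-party data passes; third-party data needs a license and attribution."""
    if record.source == "first_party":
        return True
    return record.license is not None and record.attribution is not None


def split_records(
    records: list[TrainingRecord],
) -> tuple[list[TrainingRecord], list[TrainingRecord]]:
    """Separate records cleared for training from those needing legal review."""
    usable = [r for r in records if is_usable(r)]
    needs_review = [r for r in records if not is_usable(r)]
    return usable, needs_review


if __name__ == "__main__":
    records = [
        TrainingRecord("Internal support FAQ", source="first_party"),
        TrainingRecord("Licensed article", source="third_party",
                       license="CC-BY-4.0", attribution="Example Press"),
        TrainingRecord("Scraped blog post", source="third_party"),
    ]
    usable, needs_review = split_records(records)
    print(len(usable), "usable;", len(needs_review), "need review")
```

Routing unclear records to review rather than silently dropping them keeps a record of what was excluded and why, which supports the documentation goals discussed elsewhere in this article.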

Risk 4: Dependency on External Platforms

Enterprises adopting generative AI face challenges associated with dependence on third-party platforms.

Legal safeguards, such as non-disclosure agreements (NDAs), are crucial in mitigating sudden AI model changes or discontinuation risks. These agreements protect confidential business information and provide legal recourse in the event of breaches, offering a strategic approach to navigate dependencies on external AI platforms.

Risk 5: Malicious Use of Generative AI Content

In the hands of individuals with ill intent, generative AI becomes a potent instrument for causing harm. Instances of misuse include the creation of deceptive content such as counterfeit reviews, fraudulent schemes, and various online scams. Additionally, generative AI is capable of automating the generation of spam messages and other unwanted communications.

Developing a Generative AI Governance Strategy

Many nations have yet to translate words into action amid global AI regulation discussions. As organizations intensify their efforts to develop AI governance software and tools, the need for governance structures that manage risks without hindering innovation has become paramount.

However, a challenge arises: how can organizations construct governance structures that mitigate risks, foster innovation, and adapt to future regulations?

While there’s no one-size-fits-all solution, the following foundational steps provide insights for organizations navigating the complexities of generative AI.

Step 1: Clearly Define the Governance Focus

Establishing a robust AI governance program begins with a clear focus. The approach differs significantly depending on whether the organization is developing new AI systems or evaluating third-party implementations.

For organizations developing new AI systems, the focus should be on designing secure AI governance software solutions. This involves incorporating privacy by design, ethical guidelines, and strategies to eliminate biases. Comprehensive documentation ensures accountability and transparency without revealing proprietary information.

Step 2: Map AI Systems in Use

Mapping AI systems is crucial for understanding and refining use policies. This process helps identify potential mitigation measures, aligning governance with organizational goals. Crucial information includes system type, owners, users, data sources, dependencies, access points, and functional descriptions.
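The information listed above can be captured in a simple inventory record. The following is a minimal sketch, assuming one record per AI system; the class name `AISystemRecord`, its field names, and the `missing_fields` helper are hypothetical and simply mirror the items named in this step.

```python
from __future__ import annotations

from dataclasses import asdict, dataclass, field


@dataclass
class AISystemRecord:
    """One entry in a hypothetical AI system inventory."""
    name: str
    system_type: str
    owner: str
    users: list[str] = field(default_factory=list)
    data_sources: list[str] = field(default_factory=list)
    dependencies: list[str] = field(default_factory=list)
    access_points: list[str] = field(default_factory=list)
    description: str = ""


def missing_fields(record: AISystemRecord) -> list[str]:
    """Return the names of fields left empty, in declaration order."""
    return [key for key, value in asdict(record).items() if not value]


if __name__ == "__main__":
    chatbot = AISystemRecord(
        name="support-chatbot",
        system_type="third-party LLM chatbot",
        owner="Customer Support",
        users=["support agents"],
        data_sources=["help-center articles"],
        access_points=["web widget"],
        description="Drafts replies to customer tickets",
    )
    print(missing_fields(chatbot))
```

Flagging incomplete records is one way to make gaps in the map visible early, for example a system whose dependencies nobody has documented yet.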

Step 3: Define or Design Framework for AI Governance

Before selecting AI governance frameworks, it’s crucial to identify the users: those handling development or implementation and those overseeing governance. These stakeholders guide the choice of frameworks, since some frameworks cater to developers and others to governance professionals. Depending on the scope and utility of AI systems, organizations may need to combine multiple frameworks, each focusing on different aspects of the AI lifecycle or specific features.

Looking Ahead: AI Regulatory Influence in 2024

In 2024, the EU is poised to set the stage for comprehensive regulation of generative AI. Two key regulations, the General Data Protection Regulation (GDPR) and the anticipated EU AI Act (AIA), are expected to play a central role in influencing European markets and serving as a benchmark for global governance.

Beyond Europe, the increased adoption of Generative AI has prompted widespread regulatory responses globally. Notable examples include the National Institute of Standards and Technology (NIST), which has introduced an AI Risk Management Framework.

Additionally, HITRUST has released the latest version of the Common Security Framework (CSF v11.2.0), now encompassing areas specifically addressing AI risk management.

These guidelines and frameworks provide valuable assistance to enterprises. Still, they will need to be revised to fully address the ethical and legal implications of Generative AI and the associated regulatory compliance requirements, while continuing to foster innovation.

Source: IBM Global AI Adoption Index 2023

Comparing EU and U.S. Approaches to AI Regulation

Artificial Intelligence (AI) regulation has become a focal point for global discussions on technology governance, with the European Union (EU) and the United States (U.S.) adopting distinctive approaches. While the EU emphasizes a categorical legal framework through the AI Act, the U.S. leans towards a risk management approach, primarily exemplified by the National Institute of Standards and Technology (NIST) AI Risk Management Framework.

In the European Union (EU), the AI Act had progressed to the final stages as of June 2023, with the final version still subject to debate. Passage of the act is anticipated in early 2024.

The discussions involve three key positions presented by the European Commission, the Council, and the European Parliament. The proposals under consideration cover a range of measures, including prohibiting certain types of AI systems.

Additionally, there are discussions on classifying high-risk AI systems, delegating regulatory and enforcement authorities, and prescribing standards for conformity.

Takeaway: The EU envisions a comprehensive regulatory framework to govern AI effectively.

The United States lacks comprehensive AI regulation but has various frameworks and guidelines. Congress has passed legislation aimed at maintaining U.S. leadership in AI research and development and at controlling government use of AI.

In May 2023, the Biden administration updated the National AI Research and Development Strategic Plan, emphasizing a principled and coordinated approach to international collaboration in AI research.

Various executive orders, such as “Maintaining American Leadership in AI” and “Promoting the Use of Trustworthy AI in the Federal Government,” highlight the commitment to AI development and responsible usage. There are also acts and bills like the AI Training Act, National AI Initiative Act, AI in Government Act, and the Algorithmic Accountability Act, among others.

Takeaway: The U.S. approach to AI governance is characterized by incremental progress, prioritizing preserving civil and human rights during AI deployment and fostering international collaboration aligned with democratic values.

What’s Not in There?

The crux of effective AI governance lies not only in what regulatory frameworks explicitly contain but, more importantly, in what they leave room for.

“The most important part of the (EU) AI Act is what is not there.”

Andrea Renda, Senior Research Fellow and Head of the CEPS Unit on Global Governance, Regulation, Innovation and the Digital Economy

As the digital landscape, propelled by the relentless advance of artificial intelligence, undergoes rapid transformation, regulatory structures must grapple with the challenge of remaining relevant and adaptive. This inherent dynamism necessitates a governance system that can evolve with the technology it seeks to regulate.

In recognizing the limitations of codifying every eventuality within the legislation, the emphasis must shift to creating a framework that can accommodate unforeseen developments and hypothetical scenarios. The absence of overly prescriptive measures becomes an intentional strategy, allowing the governance structure to be flexible, responsive, and forward-thinking.

As governments and entities worldwide struggle with the challenge of reining in the AI trajectory, the focus should be on establishing a regulatory environment that can learn, adapt, and refine itself in sync with the progression of AI technology.